How ClusterIP Services Work in Kubernetes

https://twitter.com/danielepolencic/status/1617493269939642368

Summary:

This thread explains how Kubernetes Services work. Services exist only in etcd, and there is no process listening on the Service’s IP address and port. Instead, traffic to the Service’s IP is intercepted by iptables rules, which know to forward the traffic to one of the Pods belonging to the Service. Kube-proxy is responsible for configuring the iptables rules.

---

Have you ever tried to ping a Service IP address in Kubernetes?

You might have noticed that it doesn’t work

Unless it just works

Confusing, I know

Let me explain

1/

Kubernetes Services exist only in etcd

There’s no process listening on the IP address and port of the Service

Try running `netstat -ntlp` on a node: no process is listening on the Service’s IP and port
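`netstat` reads the kernel’s socket table. The minimal sketch below runs the same check in Python. It assumes a Linux node, where `/proc/net/tcp` lists IPv4 TCP sockets, running on a little-endian CPU such as x86-64. The function names are hypothetical, and IPv6 sockets (`/proc/net/tcp6`) are not covered.

```python
import socket
import struct

LISTEN = "0A"  # TCP state code for LISTEN in /proc/net/tcp

def decode(addr_hex: str) -> tuple[str, int]:
    """Decode an 'IIIIIIII:PPPP' field (assumes a little-endian host)."""
    ip_hex, port_hex = addr_hex.split(":")
    # The IPv4 address is printed as a host-order 32-bit integer.
    ip = socket.inet_ntoa(struct.pack("<I", int(ip_hex, 16)))
    return ip, int(port_hex, 16)

def listeners(proc_net_tcp: str) -> set[tuple[str, int]]:
    """Return every (ip, port) pair that has a socket in the LISTEN state."""
    result = set()
    for line in proc_net_tcp.splitlines()[1:]:  # skip the header row
        fields = line.split()
        # fields[1] is local_address, fields[3] is the state column
        if len(fields) > 3 and fields[3] == LISTEN:
            result.add(decode(fields[1]))
    return result
```

Pass it the contents of `/proc/net/tcp` on a node and look for the Service’s IP and port. You should find nothing, just as `netstat` shows.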

How do they work, then?

2/

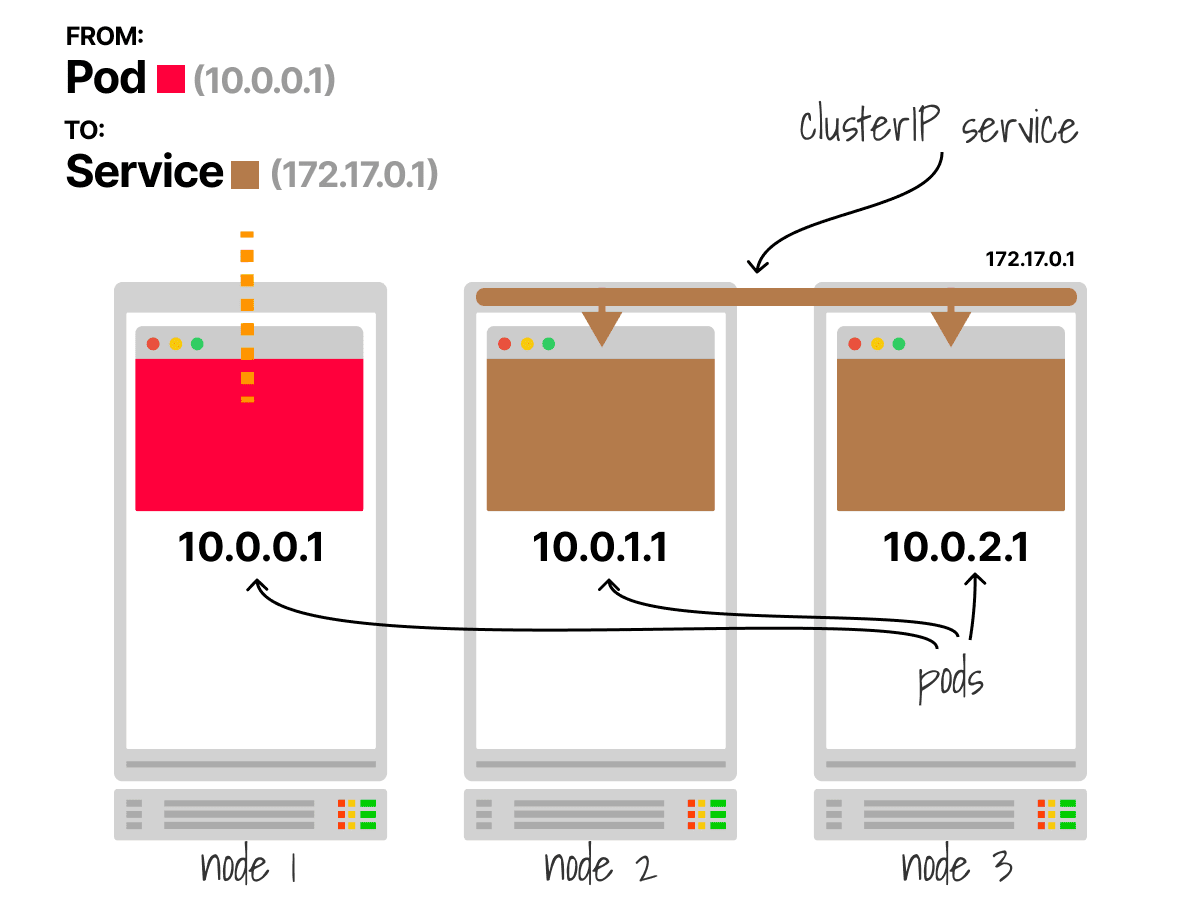

Consider a cluster with three Nodes

The red pod issues a request to the brown service using the IP `172.17.0.1`

3/

But Services don’t exist, and their IP address is only virtual

How does the traffic reach one of the pods?

4/

Kubernetes uses a very clever trick

Before the request leaves the node, it is intercepted by iptables rules

5/

The iptables rules match the Service’s IP address and port and replace the destination with the IP address (and port) of one of the Pods belonging to that Service
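This rewrite is destination NAT (DNAT). The toy model below assumes a packet is just a (protocol, destination IP, destination port) record and that the backend is chosen uniformly at random. The `Packet` class, the `SERVICES` table and the Pod addresses are hypothetical. Real iptables rules are evaluated inside the kernel, not in user space.

```python
import random
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Packet:
    proto: str     # e.g. "tcp", "udp", "icmp"
    dst_ip: str
    dst_port: int  # 0 for protocols without ports

# Hypothetical rules: (proto, Service IP, Service port) -> backend Pod endpoints
SERVICES = {
    ("tcp", "172.17.0.1", 80): [("10.0.1.5", 8080), ("10.0.2.7", 8080)],
}

def dnat(pkt: Packet, services=SERVICES, rng=random) -> Packet:
    """Rewrite the destination of a packet addressed to a Service."""
    backends = services.get((pkt.proto, pkt.dst_ip, pkt.dst_port))
    if not backends:
        return pkt  # no matching rule: the packet is left unchanged
    ip, port = rng.choice(backends)  # pick one Pod for this packet
    return replace(pkt, dst_ip=ip, dst_port=port)
```

This model picks a Pod independently for every packet. The kernel behaves differently: conntrack records the choice made for the first packet of a connection, so the rest of that connection reaches the same Pod and replies are translated back.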

6/

The destination is a pod IP address, and since Kubernetes guarantees that any pod can talk to any other pod in the cluster, the traffic can flow to the brown pod

7/

Who is configuring those iptables rules?

It’s kube-proxy that collects endpoints from the control plane and maps service IP addresses to pod IPs

(It also load balances the connections)
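In iptables mode this load balancing is random, and it is decided once per new connection. kube-proxy chains one rule per endpoint. For n endpoints, rule i (counting from 0) matches with probability 1/(n − i), so the last rule always matches. The short Python check below uses exact fractions to show that this cascade gives each endpoint the same 1/n share. The function names are illustrative.

```python
from fractions import Fraction

def rule_probabilities(n: int) -> list[Fraction]:
    """Match probability of each rule in the chain (the last always matches)."""
    return [Fraction(1, n - i) for i in range(n)]

def overall_shares(n: int) -> list[Fraction]:
    """Probability that a new connection ends up at each endpoint."""
    shares, reach = [], Fraction(1)  # reach: chance of getting to rule i
    for p in rule_probabilities(n):
        shares.append(reach * p)     # reached this rule AND it matched
        reach *= 1 - p               # otherwise fall through to the next rule
    return shares
```

With three endpoints, the rules match with probabilities 1/3, 1/2 and 1, and each endpoint ends up with 1/3 of the new connections.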

8/

Kube-proxy usually runs as a DaemonSet (one copy per node) and watches the Kubernetes API for changes

Let’s have a look at how it works

9/

Let’s observe what happens when you create a ClusterIP service

10/

A fixed virtual IP address is allocated by the control plane, and a companion Endpoints object (and, in recent Kubernetes versions, EndpointSlices) is created

The Endpoints object lists the IP addresses and ports of the Pods selected by the Service: this is where the traffic should be forwarded
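Conceptually, the control plane finds the Pods whose labels match the Service’s selector and records each Pod’s IP together with the Service’s target port. The minimal sketch below assumes Pods are plain dictionaries with `ip`, `ready` and `labels` keys and that only ready Pods should receive traffic. These data shapes are hypothetical and are not the Kubernetes API types.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """A Pod matches when it carries every key/value pair of the selector."""
    return all(labels.get(k) == v for k, v in selector.items())

def endpoints(selector: dict[str, str], target_port: int, pods: list[dict]):
    """List the (ip, port) pairs of ready Pods selected by the Service."""
    if not selector:
        return []  # Services without a selector get no managed endpoints
    return [
        (pod["ip"], target_port)
        for pod in pods
        if pod["ready"] and matches(selector, pod["labels"])
    ]
```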

11/

Kube-proxy subscribes to changes to the control plane

It is notified of every Service and endpoint addition, deletion or update

In this case, there’s a new Service (and Endpoints object)
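These notifications come from the API server’s watch mechanism, which delivers `ADDED`, `MODIFIED` and `DELETED` events (among others). The sketch below shows the bookkeeping on the receiving side. It assumes events arrive as (type, Service name, endpoint list) tuples. The `EndpointCache` class is hypothetical and much simpler than kube-proxy’s own change tracking.

```python
class EndpointCache:
    """Node-local view of Service -> endpoints, kept current by watch events."""

    def __init__(self) -> None:
        self.endpoints: dict[str, list[tuple[str, int]]] = {}
        self.dirty = False  # True when the iptables rules need a resync

    def handle(self, event_type: str, service: str, eps: list[tuple[str, int]]) -> None:
        if event_type in ("ADDED", "MODIFIED"):
            self.endpoints[service] = list(eps)
        elif event_type == "DELETED":
            self.endpoints.pop(service, None)
        else:
            return  # other event types (e.g. BOOKMARK) are ignored here
        self.dirty = True
```

Keeping a `dirty` flag, rather than rewriting rules on every event, lets a proxy batch several changes into one sync.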

12/

Kube-proxy proceeds to update its node with a new list of iptables rules

Since there’s a kube-proxy for every node in the cluster, each of them will go through the same process

In the end, the service is “ready”
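In iptables mode, the rules follow a three-level pattern in the `nat` table. `KUBE-SERVICES` matches the Service IP and port and jumps to a per-Service chain. That chain picks an endpoint chain at random, and the endpoint chain performs the DNAT. The simplified renderer below emits rule lines only and omits the chain declarations. The chain names `KUBE-SVC-<name>` and `KUBE-SEP-<name>-<i>` are illustrative, since real kube-proxy uses hashed chain names and adds more rules (for example, for masquerading). All addresses are hypothetical.

```python
def render_rules(name: str, vip: str, port: int, proto: str,
                 backends: list[tuple[str, int]]) -> list[str]:
    """Return simplified iptables-restore style rule lines for one Service."""
    svc_chain = f"KUBE-SVC-{name}"
    lines = [
        f"-A KUBE-SERVICES -d {vip}/32 -p {proto} -m {proto} --dport {port} -j {svc_chain}"
    ]
    n = len(backends)
    for i, (ip, target_port) in enumerate(backends):
        sep_chain = f"KUBE-SEP-{name}-{i}"
        # Every rule but the last matches with probability 1/(n - i).
        prob = "" if i == n - 1 else (
            f" -m statistic --mode random --probability {1 / (n - i):.10f}"
        )
        lines.append(f"-A {svc_chain}{prob} -j {sep_chain}")
        lines.append(
            f"-A {sep_chain} -p {proto} -m {proto} -j DNAT --to-destination {ip}:{target_port}"
        )
    return lines
```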

13/

This explains why there’s no process behind a Service and how kube-proxy sets up load-balancing rules on every node, but it doesn’t answer why you (sometimes) can’t ping a Service

14/

The answer is simple: kube-proxy only writes rules for the Service’s protocol and port (for example, TCP port 80), so there’s no rule for ICMP traffic

So iptables lets the ping packets through unchanged

And since the Service IP is virtual (no network interface on the node owns it), nothing replies and the packets go nowhere

15/

So why does it work on my cluster?

iptables is not the only mechanism to implement a ClusterIP service

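Other options include technologies such as IPVS and eBPF, which might behave differently (depending on which product you use)

For example, in IPVS mode kube-proxy assigns every Service IP to a dummy interface (`kube-ipvs0`) on each node, so the node itself answers the ping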

16/

This is just the tip of the iceberg

You can find a more extensive explanation (that includes things like conntrack and Linux namespaces) here: https://t.co/v6IWArnbs6

17/

And finally, if you’ve enjoyed this thread, you might also like the Kubernetes workshops that we run at Learnk8s https://t.co/uSESD5D4ft or this collection of past Twitter threads https://t.co/ru6oWdO9SP